Convergent Design

Pitch

Although accessibility features such as screen readers exist for most mobile platforms and web browsers, using a social media app is still clunky for visually impaired people. For those who live independently, this makes it hard to stay connected with close friends and family, and the resulting isolation can be devastating for mental and physical health.

VisiLink aims to be a social media app built primarily for visually impaired users and their friends and family. It employs simple gesture-based navigation for ease of use and includes a set of optional accessibility concepts: automatic Image Descriptions, Speech Detection, Intertwined Text and Audio, and Audio Notifications. The experience for a non-visually-impaired user will be entirely conventional, to minimize the habits a friend or family member has to change, while the app handles translation between audio and text for the visually impaired user, giving both groups a seamless experience. This translation matters in both directions: for example, not everyone can listen to speech recordings in public spaces or give full attention to the content being sent.

A complete version of VisiLink would include location-based alerts and navigational assistance to bring the online experience offline, allowing users to connect in person more easily. This poses extra challenges and ethical questions, so it is currently out of scope for VisiLink but may be pursued in the future. Additionally, VisiLink will create "forums" of sorts that allow content browsing and targeted content categories to complete the social media experience.

Functional Design

Concepts

- User

- purpose allow users to authenticate themselves, creating individual accounts that contain all of their data

- principle after a user registers with a username and password, authentication with the selected username and password will become possible.

- actions

- register: if username is not already registered, store credentials and add user to set of all users

- login: given username and password, return user with matching credentials, or an error

- logout: deauthenticate user, return to base state of application.

- state

- Users: set User

- Logged in/out: Users -> one Hash

- Profile [User, Content, Features]

- purpose store each owner's data, keeping public and private data separate.

- principle users can display content and enable or disable certain features of the application. They can also mark themselves as visually impaired or not. Displayed content will be public; feature choices and visual-impairment status will be private.

- actions

- create: create user's profile with given Content and any feature choices.

- update: update user's profile with given Content and any new feature choices.

- add: add a user to another's profile, linked as "friend".

- state

- profile: User -> one Profile

- features: Profile -> list Features

- display: Profile -> list Content

- Message [User, Content]

- purpose allow direct messages between one user and another.

- principle users can create a message that contains Content, which will be "modified" and delivered to the selected User.

- actions

- send: create a new Message with Content, invoke Captions and Speech Detection depending on recipient's selected features, and mark as sent by Sender.

- receive: mark given Message as received by Recipient, invoke Speech Generation depending on recipient's selected features, and mark Message as completed.

- verify: given an incomplete Message, add to processing queue.

- state

- sent/received: Message -> one User, one Boolean

- content: Message -> one Content

- Caption [Image, Text]

- purpose convert an Image to Caption(Text) using computer vision.

- principle convert an Image to a Caption ready to be used for Speech Generation for a visually impaired recipient.

- actions

- generate: given an image, use API to convert image to text, which is then marked as Caption.

- verify: given a Caption, give user ability to modify or re-generate caption with different seed.

- state

- caption: Content -> set Caption

- Speech Generation [Text, Audio]

- purpose given a Text, convert to Audio using NLP.

- principle convert a Text into Audio, to be played for a visually impaired user.

- actions

- generate: given a Text, use API to convert Text to Audio and mark as Speech Generation.

- verify: given a Speech Generation, give user ability to re-generate with different seed.

- state

- Speech Generation: Text -> one Audio(Speech Generation)

- Speech Detection [Audio, Text]

- purpose given an Audio, convert to Text using NLP.

- principle convert an Audio into Text, to be machine processed and used by other Concepts.

- actions

- generate: given an Audio, use API to convert to Text and mark as Speech Detection.

- verify: given a Speech Detection, give user ability to re-generate with different seed.

- state

- Speech Detection: Audio -> one Text(Speech Detection)
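
As an illustration of how one of the concepts above might translate into code, here is a minimal Python sketch of the User concept. It is not the project's implementation. The class name `UserConcept`, the in-memory dictionaries, the salted PBKDF2 hashing, and the reading of "Hash" as a per-user session token are all assumptions made for the example.

```python
import hashlib
import secrets
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class UserConcept:
    """Hypothetical sketch of the User concept: register, login, logout."""

    # Users: set User, stored here as username -> (salt, password hash).
    credentials: Dict[str, Tuple[bytes, bytes]] = field(default_factory=dict)
    # Logged in/out: Users -> one Hash, assumed here to be a session token.
    sessions: Dict[str, str] = field(default_factory=dict)

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        # Standard-library PBKDF2; the iteration count is illustrative only.
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)

    def register(self, username: str, password: str) -> str:
        # Precondition from the spec: the username is not already registered.
        if username in self.credentials:
            raise ValueError("username already registered")
        salt = secrets.token_bytes(16)
        self.credentials[username] = (salt, self._hash(password, salt))
        return username

    def login(self, username: str, password: str) -> str:
        # Return a session token for matching credentials, or raise an error.
        record = self.credentials.get(username)
        if record is None:
            raise PermissionError("invalid username or password")
        salt, stored = record
        if not secrets.compare_digest(stored, self._hash(password, salt)):
            raise PermissionError("invalid username or password")
        token = secrets.token_hex(16)
        self.sessions[username] = token
        return token

    def logout(self, username: str) -> None:
        # Deauthenticate; logging out a user with no session is a no-op.
        self.sessions.pop(username, None)
```

Raising exceptions is one way to model the "or an error" outcome in the spec; returning an explicit result type would work equally well.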

App-level Synchronizations

include User

include Profile

include Message

include Caption

include Speech Generation

include Speech Detection

sync register(u,p: Text) register(u,p: Speech Detection)

when User.register(u,p) return user

else return error

sync create(user: User, content: Content, features: Features)

Profile.create(user,content,features)

sync update(user: User, content: Content, features: Features)

Profile.update(user,content,features)

sync add(sender: User, recipient: User)

Profile.add(sender,False)

sync accept(sender: User, recipient: User)

Profile.add(sender,True)

sync message(sender: User, recipient: User, content: Content)

when Message.send(sender,recipient,content) output Verify(Message)

when Message.receive(sender,recipient,content) output SpeechGeneration.Message
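
The message sync above can be sketched in Python as follows. This is a rough illustration under stated assumptions, not the actual design: the feature names (`"captions"`, `"speech_detection"`, `"speech_generation"`), the `Content` and `Message` shapes, and the three converter functions are hypothetical stand-ins for the Caption, Speech Detection, and Speech Generation concepts, which would call external APIs in practice.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Content:
    kind: str  # "text", "image", or "audio"
    body: str  # raw text, or a reference to an image/audio file


@dataclass
class Message:
    sender: str
    recipient: str
    content: Content
    text: str = ""               # text form from Caption / Speech Detection
    audio: Optional[str] = None  # audio form from Speech Generation
    sent: bool = False
    received: bool = False


# Hypothetical stand-ins for the external captioning and speech APIs.
def caption_image(image_ref: str) -> str:
    return f"[caption of {image_ref}]"


def detect_speech(audio_ref: str) -> str:
    return f"[transcript of {audio_ref}]"


def generate_speech(text: str) -> str:
    return f"[audio of {text}]"


def send(sender: str, recipient: str, content: Content,
         features: Set[str]) -> Message:
    """Message.send: derive a text form based on the recipient's features."""
    msg = Message(sender, recipient, content)
    if content.kind == "image" and "captions" in features:
        msg.text = caption_image(content.body)
    elif content.kind == "audio" and "speech_detection" in features:
        msg.text = detect_speech(content.body)
    elif content.kind == "text":
        msg.text = content.body
    msg.sent = True
    return msg


def receive(msg: Message, features: Set[str]) -> Message:
    """Message.receive: add audio if the recipient enabled speech generation."""
    if "speech_generation" in features and msg.text:
        msg.audio = generate_speech(msg.text)
    msg.received = True
    return msg
```

For example, an image sent to a recipient with captions and speech generation enabled would first gain a caption in `send` and then an audio rendering of that caption in `receive`, while a plain text message to a recipient with no features enabled passes through unchanged.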

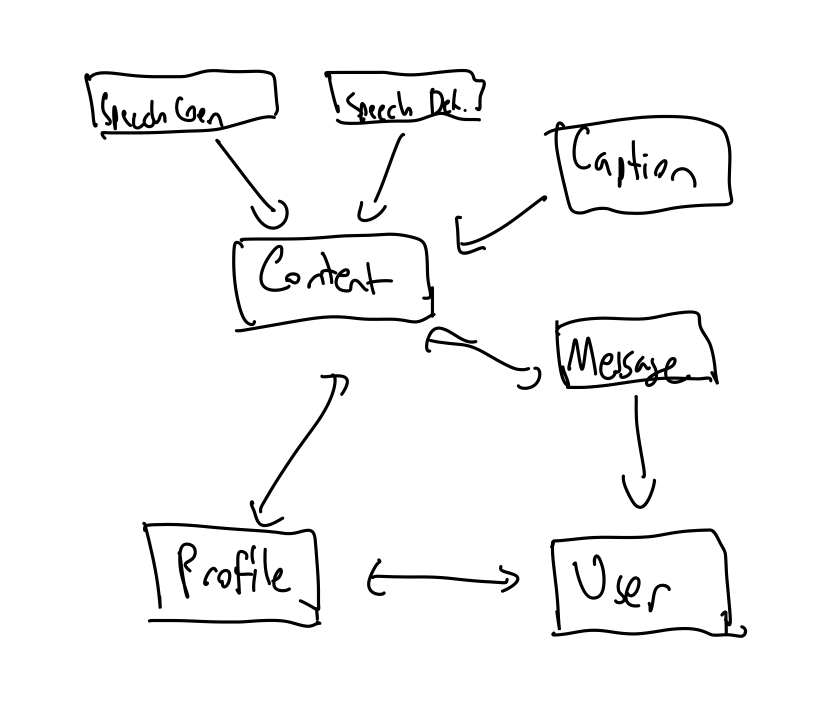

Dependency Diagram

Wireframes

Design tradeoffs

Audio and Speech Scope

- options I had multiple options for incorporating speech- and audio-based features into VisiLink. These included audio notifications, speech-to-text conversion, text-to-speech conversion, and a plethora of features that would build on these low-level capabilities.

- rationale I decided to remove audio notifications, and the features that would depend on them, to decrease the scope of this project. There was simply not enough time to properly implement notifications and all the bells and whistles such as location tracking and audio-assisted navigation.

Verification: Reliance on TTS Accuracy

- options Text to speech is not always accurate, and neither is speech to text. I had to choose between incorporating re-generation and confirmation steps, which may make the user experience clunky, and relying entirely on TTS and STT without giving the user a way to verify their message or command.

- rationale After weighing the consequences of an incorrect command or message, I realized that deleting a wrong message and sending it again was more work for the user than listening to the text conversion and confirming whether to send it. The cancellation and verification feature was also easy to implement using gesture navigation, so I chose to add verification, though it may slow down texting.
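
The verification step described in this tradeoff could look roughly like the sketch below. It is only an illustration: the gesture names (`"confirm"`, `"retry"`, `"cancel"`), the `transcribe` callback, and the retry limit are hypothetical, and the real gesture vocabulary is not specified here.

```python
from typing import Callable, Iterable, Optional


def confirm_before_send(
    audio_ref: str,
    transcribe: Callable[[str, int], str],
    gestures: Iterable[str],
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the transcript the user confirmed, or None if nothing was confirmed.

    `transcribe(audio_ref, seed)` stands in for the speech-to-text API;
    each retry re-generates the transcript with a different seed.
    """
    attempt = 0
    transcript = transcribe(audio_ref, attempt)
    for gesture in gestures:
        if gesture == "confirm":
            return transcript
        if gesture == "cancel":
            return None
        if gesture == "retry" and attempt + 1 < max_attempts:
            attempt += 1
            transcript = transcribe(audio_ref, attempt)
    # No confirmation gesture arrived, so nothing is sent.
    return None
```

Treating a missing confirmation as a cancellation keeps an unverified transcript from ever being sent, at the cost of one extra gesture per message.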

Profile, posts, and messages

- options The scope of VisiLink for now is to allow quick and easy messaging between a visually impaired user and a non-visually-impaired user. The features for the visually impaired user would also work in a conversation between two visually impaired users. However, VisiLink lacks a lot of functionality for non-visually-impaired users. The options were to implement basic social media functionality such as stories and posts on user profiles, to eliminate such features, or to add user-updatable bits of text to the profile page as a middle ground.

- rationale I chose to add a few boxes for text stories on user profiles to at least allow simple introductions and easier differentiation between users. Users with identical names and similar usernames may be confused with one another, especially when pictures cannot be read by the user.